ISO/TS 30437:2023

Human resource management — Learning and development metrics

This document provides recommendations on how to measure learning. Since the selection of metrics depends on the reason to measure and the user of the metrics, and since a balanced set of metrics is important to avoid unintended consequences, the document begins with a framework for organizational learning and development (L&D), including five categories of users, four broad reasons to measure and three types of metrics. This framework is then used to recommend 50 metrics organized by user, type of metric and size of organization, and provide a description of each. The document concludes with guidance on reporting metrics, including a description of the different types of reports and guidance on their selection based on the user’s reasons for measuring. Metrics for both formal and informal learning are included. The guidance is intended for all types of organizations, including commercial and nonprofit, as well as for all sizes. No previous knowledge of L&D metrics is required, although those new to L&D measurement can consult the suggested references on matters of frameworks, metrics and programme evaluation to learn more.

TECHNICAL SPECIFICATION

ISO/TS 30437

First edition

2023-06

Human resource management — Learning and development metrics

Management des ressources humaines — Indicateurs d’apprentissage et de développement

Reference number

© ISO 2023

All rights reserved. Unless otherwise specified, or required in the context of its implementation, no part of this publication may be reproduced or utilized otherwise in any form or by any means, electronic or mechanical, including photocopying, or posting on the internet or an intranet, without prior written permission. Permission can be requested from either ISO at the address below or ISO’s member body in the country of the requester.

ISO copyright office

CP 401 • Ch. de Blandonnet 8

CH-1214 Vernier, Geneva

Phone: +41 22 749 01 11

Email: copyright@iso.org

Website: www.iso.org

Published in Switzerland

ii

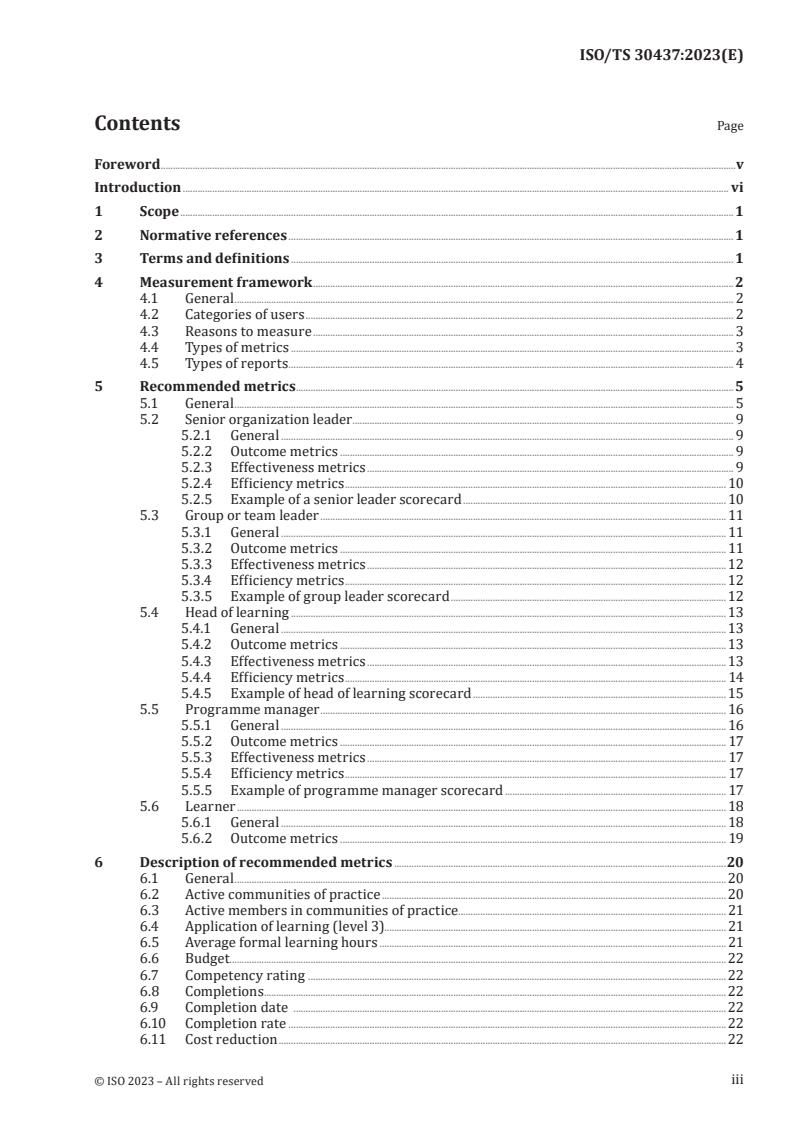

Contents

Foreword
Introduction
1 Scope
2 Normative references
3 Terms and definitions
4 Measurement framework
4.1 General
4.2 Categories of users
4.3 Reasons to measure
4.4 Types of metrics
4.5 Types of reports
5 Recommended metrics
5.1 General
5.2 Senior organization leader
5.2.1 General
5.2.2 Outcome metrics
5.2.3 Effectiveness metrics
5.2.4 Efficiency metrics
5.2.5 Example of a senior leader scorecard
5.3 Group or team leader
5.3.1 General
5.3.2 Outcome metrics
5.3.3 Effectiveness metrics
5.3.4 Efficiency metrics
5.3.5 Example of group leader scorecard
5.4 Head of learning
5.4.1 General
5.4.2 Outcome metrics
5.4.3 Effectiveness metrics
5.4.4 Efficiency metrics
5.4.5 Example of head of learning scorecard
5.5 Programme manager
5.5.1 General
5.5.2 Outcome metrics
5.5.3 Effectiveness metrics
5.5.4 Efficiency metrics
5.5.5 Example of programme manager scorecard
5.6 Learner
5.6.1 General
5.6.2 Outcome metrics
6 Description of recommended metrics
6.1 General
6.2 Active communities of practice
6.3 Active members in communities of practice
6.4 Application of learning (level 3)
6.5 Average formal learning hours
6.6 Budget
6.7 Competency rating
6.8 Completions
6.9 Completion date
6.10 Completion rate
6.11 Cost reduction
6.12 Courses available
6.13 Courses used
6.14 Documents accessed
6.15 Documents available
6.16 FTE in L&D
6.17 Job performance
6.18 Impact (level 4)
6.19 Learning (level 2)
6.20 Objective owner satisfaction (level 1)
6.21 Objective owner expectations met
6.22 Participant reaction (level 1)
6.23 Percentage of courses available by type of learning
6.24 Percentage of employees reached by learning
6.25 Percentage of employees who have completed training on compliance and ethics
6.26 Percentage of employees who participate in formal learning
6.27 Percentage of employees who participate in formal learning by category
6.28 Percentage of employees who participate in informal learning
6.29 Percentage of employees with individual development plans
6.30 Percentage of employees using the learning portal
6.31 Percentage of leaders who have participated in leadership development programmes
6.32 Percentage of leaders who have participated in training
6.33 Percentage of courses developed on time
6.34 Percentage of courses delivered on time
6.35 Performance support tools available
6.36 Performance support tools used
6.37 Reach
6.38 Results (level 4)
6.39 Return on investment (level 5)
6.40 Target audience
6.41 Total cost (organization)
6.42 Total documents accessed
6.43 Total hours used
6.44 Total participants
6.45 Total performance support tools used
6.46 Unique employees in L&D
6.47 Unique hours available
6.48 Unique hours used
6.49 Unique participants
6.50 Unique performance support tool users
6.51 User satisfaction with learning portal (or content)
6.52 User satisfaction with communities of practice
6.53 User satisfaction with performance support tools
6.54 Unique users of content
6.55 Utilization rate
6.56 Workforce competency rate
7 Reporting of metrics
7.1 General
7.2 Senior organization leaders
7.3 Group or team leaders
7.4 Head of learning
7.5 Programme managers
7.6 Individuals
Annex A (informative) Examples of reports
Annex B (informative) Participant estimation methodology
Bibliography

Foreword

ISO (the International Organization for Standardization) is a worldwide federation of national standards bodies (ISO member bodies). The work of preparing International Standards is normally carried out through ISO technical committees. Each member body interested in a subject for which a technical committee has been established has the right to be represented on that committee. International organizations, governmental and non-governmental, in liaison with ISO, also take part in the work. ISO collaborates closely with the International Electrotechnical Commission (IEC) on all matters of electrotechnical standardization.

The procedures used to develop this document and those intended for its further maintenance are described in the ISO/IEC Directives, Part 1. In particular, the different approval criteria needed for the different types of ISO document should be noted. This document was drafted in accordance with the editorial rules of the ISO/IEC Directives, Part 2 (see www.iso.org/directives).

ISO draws attention to the possibility that the implementation of this document may involve the use of (a) patent(s). ISO takes no position concerning the evidence, validity or applicability of any claimed patent rights in respect thereof. As of the date of publication of this document, ISO had not received notice of (a) patent(s) which may be required to implement this document. However, implementers are cautioned that this may not represent the latest information, which may be obtained from the patent database available at www.iso.org/patents. ISO shall not be held responsible for identifying any or all such patent rights.

Any trade name used in this document is information given for the convenience of users and does not constitute an endorsement.

For an explanation of the voluntary nature of standards, the meaning of ISO specific terms and expressions related to conformity assessment, as well as information about ISO's adherence to the World Trade Organization (WTO) principles in the Technical Barriers to Trade (TBT), see www.iso.org/iso/foreword.html.

This document was prepared by Technical Committee ISO/TC 260, Human resource management.

Any feedback or questions on this document should be directed to the user’s national standards body. A complete listing of these bodies can be found at www.iso.org/members.html.

Introduction

A well-conceived measurement and reporting strategy is necessary to ensure organizational and individual development processes are managed efficiently and effectively to produce the desired outcomes. This document provides a framework and the concepts, metrics, descriptions and guidance necessary to create a basic measurement and reporting strategy.

ISO 30422 provides guidance on a systematic process model for learning and development (L&D) to help managers and others ensure that L&D occurs in the most efficient and effective way to deliver intended outcomes. While it includes a clause on evaluation, describing the reasons to measure and the benefits expected to accrue from measurement, it does not include recommendations for specific metrics or provide guidance on definitions, purpose or use.

ISO 30422 identifies the need to address both individual and organizational outcomes as well as the efficiency and effectiveness of the L&D programmes (see ISO 30422:2022, Figure 1).

Figure 1 — Learning and development process

This document follows that guidance by focusing on three types of metrics (efficiency, effectiveness and outcome) deployed over five categories of user (senior organization leader, group or team leader, head of learning, programme manager and individual) to measure learning. This framework is used to provide specific guidance on how to measure L&D, including recommended metrics by user and by size of organization. A list of recommended metrics and an example of their use in a scorecard are provided for each user. In total, more than 50 metrics for formal and informal learning are described, including formulae and worked-out examples where appropriate. Guidance is also provided for selecting the most appropriate report to share the metrics. Four types of reports are described and illustrated by example, including scorecards, dashboards, programme evaluation reports and management reports.

NOTE Small-to-medium organizations will possibly not have a dedicated learning department or head of learning. Instead, there could be one or more employees throughout the organization with responsibility for learning.

This document also incorporates guidance from ISO 30414. All eight of the learning-related metrics from ISO 30414 are included.

Detailed guidance on the limited number of learning-related metrics from ISO 30414 can be found in ISO/TS 30428. The L&D metrics described in ISO/TS 30428 are included in this document but greater detail is provided in ISO/TS 30428.

TECHNICAL SPECIFICATION ISO/TS 30437:2023(E)

Human resource management — Learning and development metrics

1 Scope

This document provides recommendations on how to measure learning. Since the selection of metrics depends on the reason to measure and the user of the metrics, and since a balanced set of metrics is important to avoid unintended consequences, the document begins with a framework for organizational learning and development (L&D), including five categories of users, four broad reasons to measure and three types of metrics. This framework is then used to recommend 50 metrics organized by user, type of metric and size of organization, and provide a description of each. The document concludes with guidance on reporting metrics, including a description of the different types of reports and guidance on their selection based on the user’s reasons for measuring.

Metrics for both formal and informal learning are included. The guidance is intended for all types of organizations, including commercial and nonprofit, as well as for all sizes. No previous knowledge of L&D metrics is required, although those new to L&D measurement can consult the suggested references on matters of frameworks, metrics and programme evaluation to learn more.

2 Normative references

The following documents are referred to in the text in such a way that some or all of their content constitutes requirements of this document. For dated references, only the edition cited applies. For undated references, the latest edition of the referenced document (including any amendments) applies.

ISO 30400, Human resource management — Vocabulary

ISO 30414, Human resource management — Guidelines for internal and external human capital reporting

ISO 30422:2022, Human resource management — Learning and development

3 Terms and definitions

For the purposes of this document, the terms and definitions given in ISO 30400, ISO 30414 and ISO 30422 and the following apply.

ISO and IEC maintain terminology databases for use in standardization at the following addresses:

— ISO Online browsing platform: available at https://www.iso.org/obp

— IEC Electropedia: available at https://www.electropedia.org/

3.1

programme

course or series of courses with similar learning objectives designed to accomplish an organizational objective or need

Note 1 to entry: Programmes can include different types of learning, such as instructor-led, e-learning and informal learning. For example, a programme to improve leadership could begin with some e-learning to convey basic concepts, continue with an instructor-led course to discuss and role-play and end with the provision of performance support tools and coaching.

4 Measurement framework

4.1 General

In the case of L&D, there are multiple users of the metrics, multiple reasons to measure, more than 100 possible metrics [9] and multiple ways to share the selected metrics. This document shares one possible framework to facilitate understanding, selection and reporting of metrics. It also provides a common basis for communication. The purpose of this framework is to make it easier to select, report and use L&D metrics.

4.2 Categories of users

Measurement selection starts with the identification of users and the category of data aggregation required by those users. This answers the question, “Who will use these metrics and what level of data aggregation is required?”

Five categories of users are suggested:

— Senior organization leader: This category of user includes the chief executive officer (CEO), chief financial officer (CFO), head of human resources (HR), board of directors and objective owners (e.g. the head of sales, who has an objective to increase sales by 10 %). Data are aggregated across the organization. Recommended metrics include measures such as percentage of employees reached by learning, percentage of employees with an individual development plan, total cost of learning and contribution to outcomes.

— Group or team leader: This category of user includes heads of business units or other units within an organization. Data are aggregated across a group or team, which can be either a cohort taking a learning programme or a group of unrelated individuals. Recommended metrics include measures such as the number of participants, number of courses, hours spent in learning and satisfaction with the learning.

— Head of learning: The head of learning in a large organization is typically a full-time position with responsibility for all or most of the organization’s learning. In a small organization, the head of learning can be the full-time or part-time person with responsibility for L&D. Data are aggregated across the organization but used only by the head of learning and other senior L&D leaders. Examples include metrics that are not of interest to the CEO but are managed at the department level (e.g. percentage of courses completed on time, percentage of online content that is utilized, mix of virtual versus in-person learning and percentage of informal versus formal learners).

— Programme manager: The programme manager is the person responsible for a specific learning, training or development programme. The data are focused on individual programmes and not aggregated across multiple programmes. The programme manager can use these data to manage the programme on a daily or weekly basis to deliver planned results. Recommended metrics include measures such as number of participants, completion rates, completion dates, application rates and outcomes.

— Learner: The individual learner is also a consumer of learning data. In this case, the data are unique to each learner. Recommended metrics include measures such as number of offerings available, informal learning opportunities and competency assessments.

The recommended metrics in Clause 5 are organized by these five categories.
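
The different levels of data aggregation described for the five categories of users can be illustrated with a minimal sketch. The record layout, names and hour values below are hypothetical and are not taken from this document; the sketch only shows how the same learning records could be summed at organization, group, programme and learner level.

```python
from collections import defaultdict

# Hypothetical learning records: (learner, group, programme, hours).
# All names and values are made up for illustration.
records = [
    ("ana", "sales", "leadership", 4.0),
    ("ben", "sales", "leadership", 4.0),
    ("ana", "sales", "compliance", 1.5),
    ("chi", "finance", "compliance", 1.5),
]


def total_hours_by(rows, key_index):
    """Sum learning hours grouped by the field at position key_index."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[key_index]] += row[3]
    return dict(totals)


# Aggregated across the organization (senior leader, head of learning).
organization_hours = sum(row[3] for row in records)

# Aggregated across a group or team (group or team leader).
group_hours = total_hours_by(records, 1)

# Focused on individual programmes (programme manager).
programme_hours = total_hours_by(records, 2)

# Unique to each learner (learner).
learner_hours = total_hours_by(records, 0)

print(organization_hours, group_hours, programme_hours, learner_hours)
```

The same helper is reused for each level, so the only thing that changes between users in this sketch is the field used for grouping.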

4.3 Reasons to measure

The next step is to identify the reasons to measure, which influence the selection and reporting of metrics. There are many specific reasons to measure but four broad categories can be employed to group these [9]:

— Inform: Many users want a question answered. For example, “How many courses are offered?” or “How many employees have taken at least one offering?” Others want to know if there are trends in the data. For example, “Is usage trending up?” or “Is virtual learning gaining share?”

— Monitor: Users want to know if the value of a metric remains within a historically acceptable range. This implies that the value of the metric is currently or has recently been acceptable and the objective is to ensure it remains so. For example, “Does monthly participant satisfaction with learning remain above 80 % favourable?” In contrast to “inform”, users have an opinion about the desired value of a metric and are prepared to take corrective action if the value does not remain within the desired range.

— Evaluate: Users want to know if the programme was efficient and effective and if the desired organizational outcome was achieved. A post-programme review could uncover opportunities for continual improvement or could indicate further work is required. Programme evaluation is the focus of most books and articles on the measurement of learning. The Kirkpatrick and Phillips approaches are two of the most commonly employed.

— Manage: Users want to use metrics to actively manage their programmes to deliver planned results which are an improvement on what has been achieved in the past. The objective to improve the value of a measure is what distinguishes managing from monitoring, where the objective was to keep the value in the range it has been in the past. Managing requires setting specific, measurable targets for each key metric upfront and then comparing actual results to plan each month to determine if corrective action is necessary. This purpose requires special reports.
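
The distinction between monitoring and managing can be sketched in a few lines. The function names, monthly values and plan figures below are assumptions made for illustration only; the 80 % threshold follows the satisfaction example in the list above.

```python
def monitor(values, lower_bound):
    """Monitoring: list the periods where the metric did not stay above the bound."""
    return [period for period, value in values.items() if value <= lower_bound]


def manage(actual, plan):
    """Managing: actual minus plan for every planned period with an actual value."""
    return {
        period: actual[period] - plan[period]
        for period in plan
        if period in actual
    }


# Hypothetical monthly participant satisfaction (% favourable).
satisfaction = {"Jan": 84, "Feb": 79, "Mar": 82}
out_of_range = monitor(satisfaction, 80)

# Hypothetical planned and actual participants per month.
planned_participants = {"Jan": 100, "Feb": 120, "Mar": 140}
actual_participants = {"Jan": 95, "Feb": 125}
variance = manage(actual_participants, planned_participants)

print(out_of_range)  # months needing a closer look
print(variance)      # negative values are behind plan
```

Monitoring only needs an acceptable range, whereas managing needs a plan value set upfront for each period, which is why the second function takes two inputs.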

While much of the literature on L&D measurement is focused on programme evaluation, most L&D measurement and reporting activity is focused on informing and monitoring. Programme evaluation requires a higher level of analytical capability, often including statistics, while measuring to manage requires the highest level of analysis and management capability.

The reasons to measure should be identified at the beginning of the measurement period for each user. This helps to ensure that there is agreement on the measurement strategy by all parties. It also helps to ensure that the most appropriate metrics are selected and that the metrics are shared in the most appropriate type of report. (4.5 describes the different types of reports and Clause 7 sets out the most appropriate type of report for each reason to measure.)

4.4 Types of metrics

Three categories of metrics (efficiency, effectiveness and outcome) have been suggested by various authors [3,4,6,9] and are used for this framework. The three types are applicable to all categories of users and all organization types and sizes. Categorizing the metrics is important to ensure the selection of a balanced set of metrics.

— Efficiency: These are quantity metrics such as number of courses or learners, costs, utilization rates and percentage of employees actively involved with learning. Some efficiency metrics, for example, a utilization rate or percentage on-time completion, require no further information to interpret: a higher percentage is always better than a lower percentage. For most efficiency measures, however, a comparison needs to be made to history, benchmark or plan to make a statement about efficiency. Some efficiency metrics can be divided by another metric (e.g. cost per learner) and compared with history, benchmark or plan to reach a conclusion about efficiency.

— Effectiveness: These are quality metrics that answer the question, “How good was the programme?” Effectiveness metrics can help uncover issues with learning design, content or delivery, as well as application. Adopting the five levels from Kirkpatrick [5] and Phillips [7], effectiveness metrics include the participant’s reaction to the programme (level 1), the amount learned (level 2), the degree of application on the job (level 3) and the return on investment (level 5). A programme is not considered effective if participants react poorly to it, learn little, fail to apply what they learned or if the programme's cost exceeds its benefit.

— Outcome (level 4): The Kirkpatrick approach [5] calls level 4 “results” and refers to the change in the organizational metric targeted by the learning. For example, if a learning programme is designed to increase sales, then results are the increase in sales (e.g. 5 %). The Kirkpatrick approach focuses on making a correlation between the learning and the organizational objective, seeking to show a compelling chain of evidence that learning contributed to the results and met expectations. The Phillips approach [7] calls level 4 “impact”, which is defined as the isolated impact of learning on the organizational goal. For example, if a learning programme contributed 20 % of the 5 % increase in sales, then the impact of learning on sales would be 1 % higher sales (20 % × 5 % = 1 %). Annex B illustrates the most common method of isolation. Outcome metrics are always tied directly to the reason for the learning programme (e.g. increase sales or reduce injuries).

NOTE 1 Since the Kirkpatrick approach does not isolate the impact of learning, there is no measure of isolated impact. Instead, results reflect the change in the organizational objective, which could also be due to factors other than learning. Since isolated impact is not available with the Kirkpatrick approach, return on investment (ROI) can only be calculated using the Phillips approach.

NOTE 2 Not all learning programmes are designed to directly improve organizational outcomes and therefore do not have an organizational learning outcome measure. All programmes, however, do have a learner outcome measure.
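
Two of the calculations mentioned in the list above, the isolated impact of learning (20 % × 5 % = 1 %) and a ratio efficiency metric such as cost per learner, can be written out as a short sketch. The function names, the cost figures and the planned value are hypothetical and serve only to make the arithmetic concrete.

```python
def isolated_impact(total_change, share_due_to_learning):
    """Part of the change in the organizational metric attributed to learning."""
    return total_change * share_due_to_learning


def cost_per_learner(total_cost, unique_participants):
    """Ratio efficiency metric: total cost divided by unique participants."""
    if unique_participants <= 0:
        raise ValueError("unique_participants must be positive")
    return total_cost / unique_participants


# Example from the text: learning contributed 20 % of a 5 % increase in sales.
sales_increase = 0.05
learning_share = 0.20
impact_on_sales = isolated_impact(sales_increase, learning_share)
print(f"Isolated impact on sales: {impact_on_sales:.1%}")

# Hypothetical figures: a ratio metric only says something about efficiency
# once it is compared with history, benchmark or plan.
actual_cost_per_learner = cost_per_learner(50_000, 200)
planned_cost_per_learner = 275.0
under_plan = actual_cost_per_learner < planned_cost_per_learner
print(actual_cost_per_learner, under_plan)
```

The share attributed to learning is an input here; how it is estimated is the subject of Annex B and is not reproduced in this sketch.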

In this framework, the majority of L&D metrics are efficiency measures. Some add a fourth type of metric called economy, which is cost and is captured under efficiency in the framework described.

4.5 Types of reports

The final element in a measurement strategy is the reporting, which is how most users actually receive their learning metrics. The recommended report is based on the user and their reasons to measure. There are four basic types of reports employed to convey the values of the selected learning metrics:

— Scorecards: These are the traditional reports for L&D, which look like a table and contain many cells of data. Typically, metrics are shown in the rows and the time period (months, quarters or years) is shown as the column heading.

— Dashboards: These reports typically include more aggregated data (e.g. year-to-date totals) and fewer numbers than scorecards but instead include visual displays (bar or line graphs or pie charts). Dashboards can also be interactive so that displays are updated automatically as the numbers change. Unlike scorecards there is no standard format.

— Programme evaluation reports: These are special purpose reports used to share the results of a completed programme or pilot. They follow a common structure, such as need for the programme, expectations and planned outcomes, summary of implementation, comparison of actual results to plan, summary of results and suggestions for further improvement. Unlike scorecards and dashboards, programme evaluation reports are typically presentations or written documents.

— Management reports: These reports are specifically designed to help programme managers manage their programmes and help the head of learning manage the department. These reports have a common format, which is similar to the format used by the sales and manufacturing departments. Columns typically include last year’s results, plan for this year, year-to-date (YTD) results, YTD results compared with plan, forecast for the year and forecast compared with plan.

NOTE There are three types of management reports. The programme report is used by the programme manager and head of learning to manage a programme. The operations management report is used by the head of learning to manage measures selected for improvement. The summary management report is used by the head of learning to brief senior organization leaders and manage at a high level.

Examples are provided in Annex A.
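
As a rough illustration of the management report columns listed above, one row of such a report could be modelled as follows. The class name, field names and figures are hypothetical, and expressing "YTD results compared with plan" as a share of the annual plan is an assumption of this sketch rather than a definition from this document.

```python
from dataclasses import dataclass


@dataclass
class ManagementReportRow:
    """One metric in a management report (hypothetical layout)."""

    metric: str
    last_year: float  # last year's results
    plan: float       # plan for this year
    ytd: float        # year-to-date results
    forecast: float   # forecast for the year

    @property
    def ytd_share_of_plan(self):
        """YTD results compared with plan, assumed here to be YTD / annual plan."""
        return self.ytd / self.plan

    @property
    def forecast_vs_plan(self):
        """Forecast compared with plan, as a difference (negative = below plan)."""
        return self.forecast - self.plan


# Made-up values for illustration only.
row = ManagementReportRow(
    metric="Unique participants",
    last_year=900,
    plan=1000,
    ytd=450,
    forecast=980,
)

print(f"{row.metric}: {row.ytd_share_of_plan:.0%} of plan year to date, "
      f"forecast {row.forecast_vs_plan:+.0f} versus plan")
```

Keeping the two comparison columns as derived properties means they cannot drift out of step with the plan, YTD and forecast values they are computed from.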

5 Recommended metrics

5.1 General

This clause sets out the recommended metrics to measure learning, organized by user, type of measure and organization size. While there is no universal definition of small, medium or large organizations, these categories are sometimes defined by the recognized authority within the country or region.

Tables 1 and 2 provide a summary of all the recommended metrics. In recognition of the ability of larger organizations to report more metrics, many more metrics are recommended for large organizations than for small and medium organizations.

A detailed description is provided for each metric in alphabetical order in Clause 6. Most metrics can be analysed at an aggregate level and also segmented by business unit, region, gender, race, type of employee or other category of interest.

Table 1 — Recommended metrics for large organizations

Columns: Type of metric | Metric name | User (Senior leader, Group leader, Head of learning, Programme manager, Learner)

Outcome metrics

Impact of learning (level 4) x x x x
Workforce competency rate x x x x
Individual competency x

Efficiency metrics

All learning

Unique participants x x x x
Total participants x x x
Percentage of employees reached by learning x x x
Percentage of employees with individual development plans x x
Total cost x x x x
Existence of individual development plan x

Formal learning

Average formal training hours x x x
Percentage of employees who participated in formal learning x
Percentage of employees who participate in training by category x x
Percentage of employees who have completed training on compliance and ethics x x
Percentage of leaders who have participated in training x x
Percentage of leaders who have participated in leadership development x x
Completion rate x x x x
Completion date x x
Hours used x
Courses used x x
Unique employees in L&D x x
Full-time equivalent (FTE) in L&D x x
Percentage of courses available by type of learning x
Percentage of courses used by type of learning x
Percentage of courses developed on time x
Percentage of courses delivered on time x
Courses available x
Courses used x x
Unique hours available x
Unique hours used x
Total hours used x x
Utilization rate (instructors, classrooms) x

Informal learning

Percentage of employees reached by informal learning x
Unique users of online content available through the organization's portal or repository x
Unique documents available x
Unique documents used x x
Total documents accessed x
Percentage of employees using the portal x
Communities of practice x x
Active communities of practice (CofP) x
Active CofP members x
Performance support tools available x
Performance support tools used x x
Unique performance support tools users x

NOTE Italics indicate metric recommended by ISO 30414 and detailed in ISO/TS 30428.

...
